From Turing to Today: The Evolution of Artificial Intelligence

From the personalized recommendations on our streaming services to the self-driving cars we see on the roads and the photo-realistic AI-generated content making headlines, AI is gradually weaving itself into the fabric of our daily lives. But how did we get here? I believe that understanding where we’ve come from is essential to understanding where we are heading, or where we may want to go. Looking back is therefore a constructive exercise. In this piece, I take another historical dive, this time from a different vantage point: we compare modern AI with early AI, trace the key historical developments and milestones, and explore the exciting possibilities that lie ahead.

Context

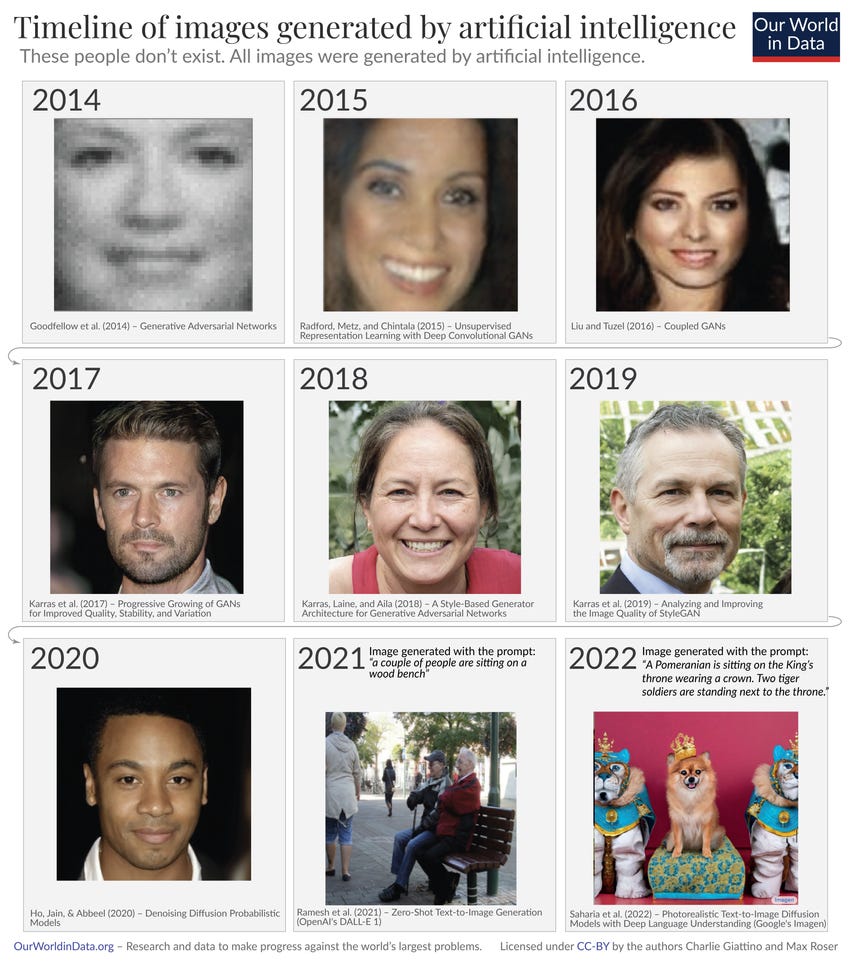

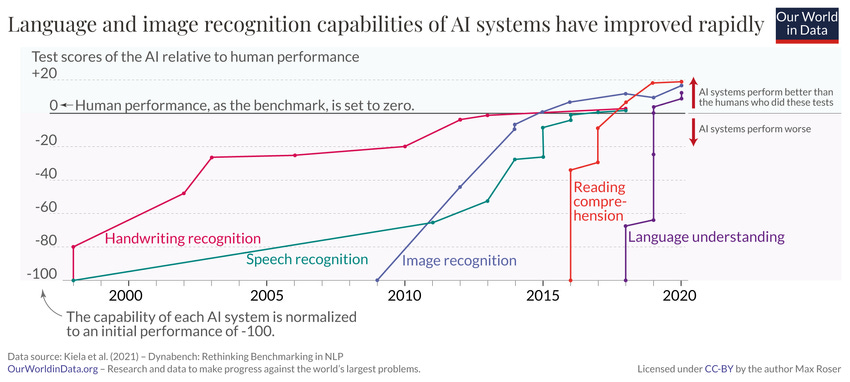

Modern AI is a product of remarkable leaps in hardware and improvements in computational power. I think the below images by Our World in Data do a great job of demonstrating this. AI systems have come far from the early days of primitive, black-and-white pixelated images and can now generate photo-realistic images from challenging prompts in seconds (Exhibit A). AI now exceeds human performance in areas like language understanding, reading comprehension, and, is at par, if not ahead on speech and handwriting recognition (Exhibit B)

Exhibit A

Exhibit B

History

Below, we trace the key milestones and developments, from Turing’s vision to today’s reality, that have helped take AI from an idea to something we use every day.

Humble Beginnings: Turing & McCarthy (1950s)

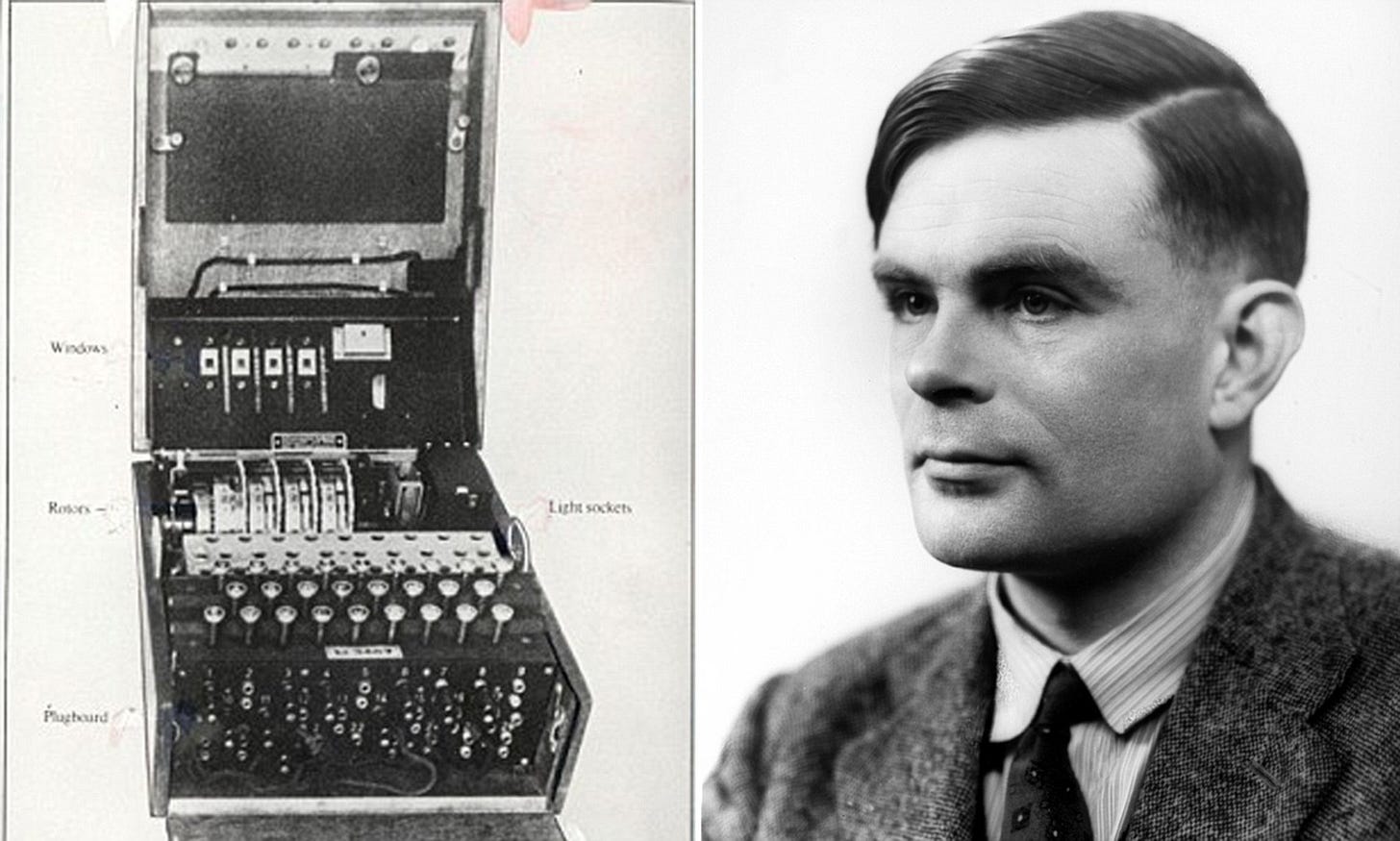

The dream of intelligent machines stretches back centuries, but its modern incarnation began with Alan Turing's groundbreaking 1950 paper, "Computing Machinery and Intelligence". He proposed a test, now known as the Turing Test, to gauge a machine's ability to exhibit intelligent behavior equivalent to that of a human.

Turing's work laid the intellectual foundation for the field of AI, which took a more structured form in 1956 with the Dartmouth Summer Research Project on Artificial Intelligence. There, researchers from various backgrounds convened to brainstorm and lay the groundwork for intelligent machines. The conference cemented the term "Artificial Intelligence", coined by John McCarthy in the 1955 proposal for the workshop, and ignited a wave of optimism about the possibilities of machine cognition.

The Golden Years and the AI Winter (1960s-1970s)

The decades following the Dartmouth Conference were a rollercoaster for AI research. The initial excitement of this golden period brought significant advances, including pioneering programs such as ELIZA, one of the first chatbots, and Shakey, a robot that could navigate its surroundings and manipulate objects. However, high expectations were soon tempered by the realization of how immensely difficult it is to replicate human intelligence. This was partly due to the era's computers, which, by today's standards, had only a fraction of the power needed.

For instance, the IBM 704 mainframe, a beast that filled a room with thousands of vacuum tubes and weighed 20,000 lbs, had less processing power than a modern handheld calculator. A lack of robust datasets and limited storage capacity further constrained progress, leading to the AI winter of the 1970s, a period of waning interest and funding for the field. This is best captured by the remarks of AI researchers Stuart Russell and Peter Norvig in their 1995 book, "Artificial Intelligence: A Modern Approach". Critically evaluating the projections of AI pioneer Herbert Simon, they wrote:

“These predictions [a computer would be a chess champion, and a significant mathematical theorem would be proved by a machine] came true (or approximately true) within 40 years rather than 10. Simon’s overconfidence was due to the promising performance of early AI systems on simple examples. In almost all cases, however, these early systems turned out to fail miserably when tried out on wider selections of problems and on more difficult problems.”

GPUs, Neural Networks, and Computer Vision (Late 1990s-2010s)

Image created by Microsoft Copilot (powered by DALL-E), depicting neural networks (brain), parallel processing (GPUs), and computer vision (open eye).

The resurgence of interest in AI began in the late 1990s and early 2000s, fueled by a variety of factors:

Increased computing power: The invention of the transistor revolutionized computing, allowing for smaller, faster machines. Moore's Law, the observation that the number of transistors on a chip roughly doubles every two years, fueled exponential growth in processing power and gave AI researchers the resources to explore more complex techniques.

Deep Learning and Neural Networks: Hardware alone couldn't make AI work; a new software paradigm was needed. This is where deep learning came in. Inspired by the human brain, deep learning uses models called artificial neural networks, built from many layers of simple interconnected units (hence "deep"). These networks are like super-powered pattern recognizers, able to learn from massive amounts of data. This lets them do things that were once impossible for computers, like recognizing faces in photos or understanding spoken language.

Graphics Processing Units (GPUs): In the late 1990s, GPUs were for gamers, powering realistic 3D visuals. However, as explained in this NVIDIA blog, their parallel processing architecture, which performs thousands of simple calculations at once, proved ideal for training deep neural networks and for accelerated computing more broadly. NVIDIA, a pioneer in the space, makes 90% of the GPUs that can be used in machine learning, including the A100, widely known as the workhorse powering the AI boom.

Availability of data: The proliferation of smart devices, social media, and the Internet of Things generated massive amounts of data, which has served as the fuel for training AI systems to learn and improve their performance.

Cloud Computing platforms: The growth of cloud computing platforms, such as Amazon Web Services, Google Cloud Platform, and Microsoft Azure, has provided the necessary infrastructure and resources to host and scale AI applications and models.
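To make the idea of a network "learning from data" a little more concrete, here is a minimal Python sketch of the simplest possible neural network: a single artificial neuron (a perceptron) that learns the logical AND function from four labelled examples. It is an illustration under simplifying assumptions, not how modern deep learning is done: real deep networks stack millions or billions of such units in many layers and are trained with gradient descent and backpropagation rather than the classic perceptron rule used here. The function names and the toy dataset are hypothetical.

```python
# Minimal sketch: one artificial neuron (perceptron) trained with the classic
# perceptron learning rule. Names and data are illustrative; deep networks
# stack many such units in layers and are trained with backpropagation instead.


def predict(weights, bias, inputs):
    """Fire (return 1) if the weighted sum of the inputs plus bias is positive."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(examples, epochs=20, learning_rate=1.0):
    """Learn weights and a bias from labelled (inputs, target) pairs."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                # Nudge each weight (and the bias) toward the correct answer.
                weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:  # every example classified correctly: stop early
            break
    return weights, bias


# Toy dataset: logical AND, learned from examples rather than hand-coded rules.
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_EXAMPLES)
for inputs, target in AND_EXAMPLES:
    print(inputs, "->", predict(weights, bias, inputs), "expected", target)
```

Nobody writes a rule saying "output 1 only when both inputs are 1"; the neuron arrives at weights that produce that behavior by correcting its own mistakes on the examples. Deep learning scales this same idea up to far larger networks and datasets.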

It is the collective impact of these developments that has made what was once thought impossible a reality. Computer vision, for instance, is a field reborn thanks to the pairing of GPUs with deep learning algorithms. In the pre-deep-learning era, image recognition software struggled to tell a cat from a dog. With convolutional neural networks (machine learning models designed for images), however, near-human accuracy in image recognition has become achievable. This breakthrough has applications in everything from self-driving cars to medical diagnosis.
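The core operation that gives convolutional neural networks their name can be sketched in a few lines of Python. This minimal sketch slides a small 3x3 filter (kernel) over a tiny, made-up grayscale image and sums the element-wise products at each position, producing a "feature map" that lights up where the image changes from dark to bright. The filter values here are hand-picked to detect vertical edges, which is an assumption for illustration; in a real CNN they are learned from data, and many such filters are stacked across layers. The function and variable names are hypothetical.

```python
# Minimal sketch of the convolution step inside a CNN layer (no padding,
# stride 1). The image and kernel values are hypothetical examples.


def convolve2d(image, kernel):
    """Slide `kernel` over `image` and return the resulting feature map.

    Like most deep learning libraries, this computes cross-correlation
    (the kernel is not flipped), which CNNs conventionally call convolution.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Sum of element-wise products between the kernel and the image patch.
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


# 5x6 grayscale image: dark (0) on the left half, bright (9) on the right half.
IMAGE = [[0, 0, 0, 9, 9, 9] for _ in range(5)]

# Hand-picked vertical-edge detector; a trained CNN would learn its own values.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in convolve2d(IMAGE, VERTICAL_EDGE):
    print(row)
```

Each printed row is `[0, 27, 27, 0]`: zero over the flat regions and a strong response where the dark-to-bright edge sits. Early CNN layers learn detectors like this one, and deeper layers combine them into detectors for shapes and, eventually, whole objects such as cats and dogs.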

Further Software Advancements & Specialized Hardware (2010s-Present)

Transformer models, introduced in the 2017 paper "Attention Is All You Need", have revolutionized natural language processing, enabling breakthroughs in machine translation, text generation, and more. A transformer is a neural network that learns context, and thus meaning, by tracking relationships in sequential data such as the words in this sentence. It does this with a mechanism called self-attention, which helps it detect the subtle ways in which even distant elements of a sequence influence and depend on each other. Transformers now power parts of search on both Google and Microsoft Bing.
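The self-attention mechanism described above can be sketched in plain Python as scaled dot-product attention: each token's vector is compared with every token's vector (a dot product scaled by the square root of the vector size), the scores are turned into weights with a softmax, and the token's new representation is the weighted mix of all the vectors. This is a simplified, illustrative sketch under stated assumptions: the three two-dimensional "embeddings" are made up, and the inputs are used directly as queries, keys, and values, whereas a real transformer first passes them through learned projection matrices and runs many attention heads in parallel.

```python
import math

# Minimal sketch of scaled dot-product self-attention. Simplification: the
# token vectors serve directly as queries, keys and values (a real
# transformer applies learned projections first and uses many heads).


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Return (new token vectors, attention weights) for a list of vectors."""
    dim = len(tokens[0])
    outputs, attention = [], []
    for query in tokens:
        # How strongly this token relates to every token in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        attention.append(weights)
        # New representation: attention-weighted mix of all token vectors.
        outputs.append(
            [sum(w * value[i] for w, value in zip(weights, tokens)) for i in range(dim)]
        )
    return outputs, attention


# Hypothetical 2-D embeddings: "kitten" points in a similar direction to "cat".
words = ["cat", "sat", "kitten"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]

_, attention = self_attention(embeddings)
for word, weights in zip(words, attention):
    print(word, [round(w, 2) for w in weights])
```

In the printed weights, "cat" places noticeably more attention on "kitten" than on "sat", because their vectors point in similar directions. Position in the sequence plays no role in the score, which is why attention can link related words no matter how far apart they are.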

The success of deep learning, and the ever-growing performance demands of models such as transformers, also pushed companies like Google to innovate in hardware, not just software. Google, for instance, developed the Tensor Processing Unit (TPU), announced in 2016: a piece of specialized hardware designed specifically for deep learning workloads, accelerating the training and deployment of large AI models.

In just the past decade, we've witnessed one staggering AI breakthrough after another, from DeepMind's AlphaGo mastering the ancient game of Go to OpenAI's DALL-E and GPT models exhibiting stunning capabilities in image and text generation. AI can now understand and generate our messy, nuanced languages better than ever. We see this in eerily conversational chatbots and translation tools that are redefining human-machine relationships.

Looking Forward

Reflecting on the evolution of AI, it is clear we've come a long way. So, what's next? While it's impossible to predict the future perfectly, I've got some ideas that I hope will at least offer some food for thought to those of you who are curious.

a) Potential investment bubble, but technology will endure: An impending AI winter will likely follow once the victors in the field begin to emerge. Right now, the buzz surrounding AI's potential outpaces the concrete achievements, largely because our understanding is still catching up to our ambitions. But I don’t want to mix up the hype around investing in AI with the actual progress in the technology itself. Think back to when the dotcom bubble burst. It didn't take down the internet; instead, it cleared the deck for the strongest players to thrive. We're likely looking at something similar with AI. The technology isn't going anywhere, but as soon as the funding frenzy cools off, we can expect a lot of today's start-ups to vanish.

b) Models should keep improving: Models have improved steadily over time, but they are still far from perfect. OpenAI CEO Sam Altman recently said, “This is the stupidest the models will ever be,” and “ChatGPT-4 kinda sucks.” I remind myself of this every time ChatGPT spits out something unhelpful.

c) Software → AI: In 2011, Marc Andreessen famously captured the growing dominance of software in the global economy with his Wall Street Journal op-ed "Why Software Is Eating the World". Now, NVIDIA CEO Jensen Huang has taken the idea a step further by suggesting that AI is eating software. Whether AI will prove to be an enhancement to SaaS or sit at its very heart is hard to say, but this much can be said with confidence: the move from traditional software to AI is a pivotal technological shift, and those who fail to adapt or prepare for it risk being eclipsed by those who embrace it.

d) AI models are not without flaws: From the subtle encoding of bias and discrimination to thorny issues of data privacy, the advent of deepfakes, and tangible security threats, the path toward an AI-led future is laden with ethical hurdles and societal challenges. Navigating this intricate maze carefully is essential.

Thank you | What’s next?

That’s all from my end. Thank you for reading! As I’ve said before, I’m not an expert, and my view is just one among many. What I am is curious, deeply interested, and excited at the prospect of diving in and sharing what I learn. I’d love to hear what you think, where you agree or disagree, and what I may have missed!

My favorite reflection period is a quarter (and yes, my background in equities investing may have something to do with it). Practically speaking, a quarterly update is frequent enough for the long-term thinker while, I hope, being more digestible and better prioritized than a daily or weekly newsletter. So, for my next post, I plan to do a quarterly wrap-up of the biggest AI developments of the last three months. It has been a fun, exciting, and eventful quarter, and I hope to bring you an engaging piece that not only captures the developments but also highlights why they matter. Stay tuned, and subscribe to my Substack if you’d like my posts delivered straight to your inbox.